There was a lot of exciting new stuff on show at Sitecore's MVP Summit and Symposium the other week. Plenty of others have written up the general goings-on at those events (have a google – there's lots to read), so I thought I'd focus on something more specific that piqued my interest: the novel approach that's being taken to pipelines in some of the new code Sitecore are producing.

Pipelines are

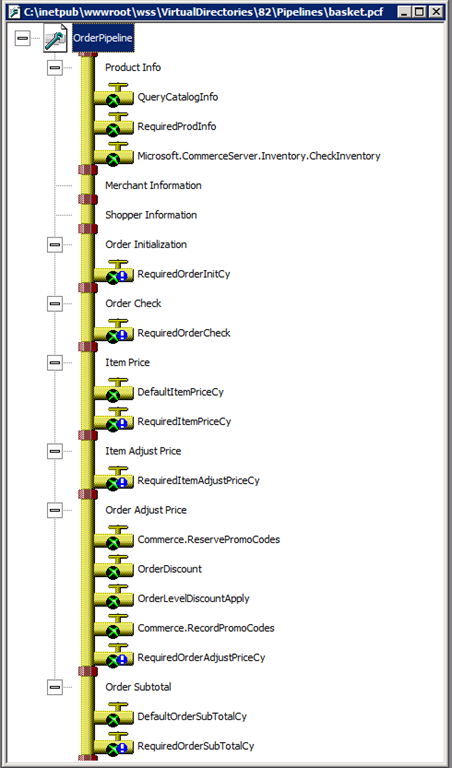

a common concept in Sitecore, but I first came across the idea of a pipeline in code when I started working with a beta of Commerce Server back in 2000. So it's fitting that one of the places Sitecore were showing off their new code was in Sitecore Commerce – the replacement for the old Commerce Server codebase. I've also made use of pipeline patterns in some personal code I've written over the years – most recently in my homebrew replacement for the old Google Reader, which I use to keep on top of the news.

One of the things that all these pipeline implementations have in common is that they use a single type as the input and output of the overall process, and each step in the pipeline has that type as its input and output. An object goes in the top, passes through each step and then comes out the end. A lot of the time, that's all well and good. But sometimes I've felt that the object being passed through ends up a bit messy, because it has to store state and data for every step. For example, Commerce Server's shopping baskets solved the problem of needing complex data types in the pipeline by using a dictionary object, which acquired more entries and child lists and dictionaries over the course of the execution. And my RSS reader's "process new RSS feed items" pipeline suffered from a similar issue: its data object ended up with lots of fixed properties for turning an entry from an RSS feed into "safe" title, metadata and content data to record in the database.
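For contrast, here's a minimal sketch of that conventional single-type style in C#, loosely modelled on the RSS example. The names (`ISameTypeStep`, `SameTypePipeline`, `FeedItemData`, `TrimTitleStep`, `EscapeTitleStep`) are hypothetical and not taken from Sitecore or Commerce Server. Every step takes and returns the same `FeedItemData`, so each new concern tends to add another property to that shared object.

```csharp
using System.Collections.Generic;

// Hypothetical data object: every step reads and writes properties on it.
public class FeedItemData
{
    public string RawTitle { get; set; } = "";
    public string SafeTitle { get; set; } = "";
    public List<string> Log { get; } = new List<string>();
}

// In the classic style, each step has the same type for input and output.
public interface ISameTypeStep<T>
{
    T Process(T data);
}

// Runs each step in order, feeding one step's output into the next.
public class SameTypePipeline<T>
{
    private readonly List<ISameTypeStep<T>> _steps = new List<ISameTypeStep<T>>();

    public SameTypePipeline<T> Add(ISameTypeStep<T> step)
    {
        _steps.Add(step);
        return this;
    }

    public T Run(T data)
    {
        foreach (var step in _steps)
        {
            data = step.Process(data);
        }
        return data;
    }
}

// Hypothetical step: trims the raw title into a separate "safe" property.
public class TrimTitleStep : ISameTypeStep<FeedItemData>
{
    public FeedItemData Process(FeedItemData data)
    {
        data.SafeTitle = data.RawTitle.Trim();
        data.Log.Add("trimmed");
        return data;
    }
}

// Hypothetical step: escapes '<' in the safe title.
public class EscapeTitleStep : ISameTypeStep<FeedItemData>
{
    public FeedItemData Process(FeedItemData data)
    {
        data.SafeTitle = data.SafeTitle.Replace("<", "&lt;");
        data.Log.Add("escaped");
        return data;
    }
}
```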

So that background made me very interested in how some of Sitecore's new code works. After seeing some presentations, I had a very interesting chat with Stephen Pope in the Symposium hotel bar, which got me thinking about reproducing their idea of code-first pipelines, where the input type doesn't need to match the output type. It seemed to me that I might be able to tidy up some of my own code, so I started investigating how it might work for me.

NB: While I'm focusing here on pipelines that are defined wholly in code, I don't mean to suggest that Sitecore's are too. Config files and patching just aren't things I've needed in the code I'm interested in.

And after a bit of tinkering and thinking about what Stephen had been saying, it turns out you can do quite interesting things with some pretty simple code here...

```csharp
public interface IPipelineStep<INPUT, OUTPUT>
{
    OUTPUT Process(INPUT input);
}
```

By declaring different type parameters for the input and the output, we can describe a step that changes the type of the data it's processing...

So a (trivial) concrete example of such a step might turn an integer into a string like so:

```csharp
public class IntToStringStep : IPipelineStep<int, string>
{
    public string Process(int input)
    {
        return input.ToString();
    }
}
```

A pipeline needs to be able to compose together a set of these steps. The usage I've been thinking about would adopt a "code first" approach to setting these things up. Extension methods give an easy way to achieve this:

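```csharp
public static class PipelineStepExtensions
{
    // Passes the value into the step and returns the step's result,
    // so that calls can be chained left to right.
    public static OUTPUT Step<INPUT, OUTPUT>(this INPUT input, IPipelineStep<INPUT, OUTPUT> step)
    {
        return step.Process(input);
    }
}
```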

So you can stack together a set of steps using code like:

```csharp
int input = 5;

string result = input
    .Step(new IntToStringStep())
    .Step(new DoSomethingToAString());
```
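Putting the pieces so far together, a self-contained sketch of a two-step chain might look like this. `BracketStep` is a hypothetical stand-in for `DoSomethingToAString`, which isn't defined here, and `ChainDemo` is just a hypothetical wrapper to run the chain; the rest follows the code above.

```csharp
public interface IPipelineStep<INPUT, OUTPUT>
{
    OUTPUT Process(INPUT input);
}

public static class PipelineStepExtensions
{
    // Feeds the value into the step and returns the step's result.
    public static OUTPUT Step<INPUT, OUTPUT>(this INPUT input, IPipelineStep<INPUT, OUTPUT> step)
    {
        return step.Process(input);
    }
}

public class IntToStringStep : IPipelineStep<int, string>
{
    public string Process(int input)
    {
        return input.ToString();
    }
}

// Hypothetical stand-in for DoSomethingToAString: wraps the text in brackets.
public class BracketStep : IPipelineStep<string, string>
{
    public string Process(string input)
    {
        return "[" + input + "]";
    }
}

public static class ChainDemo
{
    public static string Run(int input)
    {
        return input
            .Step(new IntToStringStep())   // int -> string
            .Step(new BracketStep());      // string -> string
    }
}
```

Here `ChainDemo.Run(5)` returns `"[5]"`. Because the generic types flow from one `Step` call to the next, the compiler rejects a step whose input type doesn't match the previous step's output. One side effect of the extension-method approach is that `Step` is callable on any value wherever `PipelineStepExtensions` is in scope.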

But literal code like that isn't much use if we want to inject a pipeline via DI, so we need a wrapper class that can be used as a base for types that will be registered for injection. At its simplest (ignoring the whole business of naming pipelines, patching pipeline steps and so on), it just needs to set up the pipeline internally and expose a method to process the data:

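```csharp
using System;

public abstract class Pipeline<INPUT, OUTPUT>
{
    // The composed chain of steps, set up by each concrete pipeline's constructor.
    public Func<INPUT, OUTPUT> PipelineSteps { get; protected set; }

    public OUTPUT Process(INPUT input)
    {
        return PipelineSteps(input);
    }
}
```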

So you can create a concrete pipeline just by defining the set of steps you need in the constructor. Taking the trivial example from above:

```csharp
public class TrivialPipeline : Pipeline<int, string>
{
    public TrivialPipeline()
    {
        PipelineSteps = input => input
            .Step(new IntToStringStep())
            .Step(new DoSomethingToAString());
    }
}
```
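To show why the base class helps with DI, here's a sketch in which a hypothetical `ReportService` depends only on `Pipeline<int, string>` and receives a concrete pipeline through its constructor. `BracketStep` and `ReportService` are assumptions for illustration; the pipeline is passed in by hand here, where in practice a container would supply it.

```csharp
using System;

public interface IPipelineStep<INPUT, OUTPUT>
{
    OUTPUT Process(INPUT input);
}

public static class PipelineStepExtensions
{
    public static OUTPUT Step<INPUT, OUTPUT>(this INPUT input, IPipelineStep<INPUT, OUTPUT> step)
        => step.Process(input);
}

public abstract class Pipeline<INPUT, OUTPUT>
{
    public Func<INPUT, OUTPUT> PipelineSteps { get; protected set; }

    public OUTPUT Process(INPUT input) => PipelineSteps(input);
}

public class IntToStringStep : IPipelineStep<int, string>
{
    public string Process(int input) => input.ToString();
}

// Hypothetical string step standing in for DoSomethingToAString.
public class BracketStep : IPipelineStep<string, string>
{
    public string Process(string input) => "[" + input + "]";
}

public class TrivialPipeline : Pipeline<int, string>
{
    public TrivialPipeline()
    {
        PipelineSteps = input => input
            .Step(new IntToStringStep())
            .Step(new BracketStep());
    }
}

// Hypothetical consumer: it only knows about the abstract pipeline type,
// so any concrete Pipeline<int, string> can be injected (or faked in tests).
public class ReportService
{
    private readonly Pipeline<int, string> _pipeline;

    public ReportService(Pipeline<int, string> pipeline)
    {
        _pipeline = pipeline;
    }

    public string Describe(int value) => "Value: " + _pipeline.Process(value);
}
```

With Microsoft.Extensions.DependencyInjection, for instance, a registration along the lines of `services.AddTransient<Pipeline<int, string>, TrivialPipeline>()` would do that wiring. Note also that because the steps are created with `new` inside the lambda, fresh step instances are constructed on every call to `Process`; if steps were expensive, or needed dependencies of their own, you could create them once in the constructor and capture them instead.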

Already that's looking interesting, but this structure makes a couple of further tricks possible.

First up: what if the overall pipeline class above implements the IPipelineStep interface? It's a trivial change:

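```csharp
using System;

// Implementing IPipelineStep means a whole pipeline can be used as a single step.
public abstract class Pipeline<INPUT, OUTPUT> : IPipelineStep<INPUT, OUTPUT>
{
    public Func<INPUT, OUTPUT> PipelineSteps { get; protected set; }

    public OUTPUT Process(INPUT input)
    {
        return PipelineSteps(input);
    }
}
```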

But it allows you to nest your pipeline objects, so that the top level pipeline can use other pipelines instead of individual steps. So you can build up more complex behaviour from groups of simpler tasks. Continuing with the trivial examples:

```csharp
public class CompoundPipeline : Pipeline<int, string>
{
    public CompoundPipeline()
    {
        PipelineSteps = input => input
            .Step(new AnInitialStep())
            .Step(new InnerPipeline())
            .Step(new IntToStringStep())
            .Step(new DoSomethingWithAString());
    }
}
```

```csharp
public class InnerPipeline : Pipeline<int, int>
{
    public InnerPipeline()
    {
        PipelineSteps = input => input
            .Step(new DoSomethingWithAnInteger())
            .Step(new SomethingElseWithAnInteger());
    }
}
```
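A runnable version of the nesting idea might look like this, with hypothetical `AddOneStep` and `DoubleStep` standing in for the placeholder steps above (`AddOneStep` plays the part of `AnInitialStep`, and the string step is left out for brevity):

```csharp
using System;

public interface IPipelineStep<INPUT, OUTPUT>
{
    OUTPUT Process(INPUT input);
}

public static class PipelineStepExtensions
{
    public static OUTPUT Step<INPUT, OUTPUT>(this INPUT input, IPipelineStep<INPUT, OUTPUT> step)
        => step.Process(input);
}

// Because Pipeline implements IPipelineStep, a pipeline can be passed to Step().
public abstract class Pipeline<INPUT, OUTPUT> : IPipelineStep<INPUT, OUTPUT>
{
    public Func<INPUT, OUTPUT> PipelineSteps { get; protected set; }

    public OUTPUT Process(INPUT input) => PipelineSteps(input);
}

// Hypothetical concrete steps.
public class AddOneStep : IPipelineStep<int, int>
{
    public int Process(int input) => input + 1;
}

public class DoubleStep : IPipelineStep<int, int>
{
    public int Process(int input) => input * 2;
}

public class IntToStringStep : IPipelineStep<int, string>
{
    public string Process(int input) => input.ToString();
}

// Inner pipeline: int -> int (double, then add one).
public class InnerPipeline : Pipeline<int, int>
{
    public InnerPipeline()
    {
        PipelineSteps = input => input
            .Step(new DoubleStep())
            .Step(new AddOneStep());
    }
}

// Outer pipeline uses InnerPipeline exactly like any other step.
public class CompoundPipeline : Pipeline<int, string>
{
    public CompoundPipeline()
    {
        PipelineSteps = input => input
            .Step(new AddOneStep())
            .Step(new InnerPipeline())
            .Step(new IntToStringStep());
    }
}
```

Here `new CompoundPipeline().Process(5)` gives `"13"`: 5 + 1 = 6, the inner pipeline doubles that to 12 and adds one, and the result is converted to a string. Because `InnerPipeline` is an `IPipelineStep<int, int>`, the compiler checks it fits between steps that produce and consume `int`, just as it would for a single step.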

The second interesting thing about this approach is that pipeline steps can be decorators that add logic to an inner step. For example, what if you want to make a particular step optional, based on some criterion? Rather than baking that logic into the underlying component, you can put it into a decorator and wrap that around any component you want to make optional:

```csharp
using System;

public class OptionalStep<INPUT, OUTPUT> : IPipelineStep<INPUT, OUTPUT> where INPUT : OUTPUT
{
    private readonly IPipelineStep<INPUT, OUTPUT> _step;

    private readonly Func<INPUT, bool> _choice;

    public OptionalStep(Func<INPUT, bool> choice, IPipelineStep<INPUT, OUTPUT> step)
    {
        _choice = choice;
        _step = step;
    }

    public OUTPUT Process(INPUT input)
    {
        if (_choice(input))
        {
            // Criterion met: run the wrapped step.
            return _step.Process(input);
        }
        else
        {
            // Skip the step; the constraint INPUT : OUTPUT allows returning the input.
            return input;
        }
    }
}
```

This step takes a function that operates on the input to decide whether to run the child step or just pass the input through unchanged. Note that, unlike the previous steps we've looked at, this one requires that the input can be returned as the output (so `INPUT` is either the same type as `OUTPUT` or derived from it), because otherwise the "skip over" behaviour doesn't work. That's why it has the type constraint `where INPUT : OUTPUT`, which ensures `INPUT` and `OUTPUT` are related. So if you wanted a particular step to run only if the input was greater than 15, you could use something like:

```csharp
public class PipelineWithOptionalStep : Pipeline<int, int>
{
    public PipelineWithOptionalStep()
    {
        PipelineSteps = input => input
            .Step(new DoSomething())
            .Step(new OptionalStep<int, int>(i => i > 15, new ThisStepIsOptional()))
            .Step(new DoADifferentThing());
    }
}
```
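Here's a self-contained sketch of that pipeline, with hypothetical concrete steps (`AddTenStep`, `NegateStep`, `AddOneStep`) substituted for `DoSomething`, `ThisStepIsOptional` and `DoADifferentThing`. `OptionalStep` is the same as above.

```csharp
using System;

public interface IPipelineStep<INPUT, OUTPUT>
{
    OUTPUT Process(INPUT input);
}

public static class PipelineStepExtensions
{
    public static OUTPUT Step<INPUT, OUTPUT>(this INPUT input, IPipelineStep<INPUT, OUTPUT> step)
        => step.Process(input);
}

public abstract class Pipeline<INPUT, OUTPUT> : IPipelineStep<INPUT, OUTPUT>
{
    public Func<INPUT, OUTPUT> PipelineSteps { get; protected set; }

    public OUTPUT Process(INPUT input) => PipelineSteps(input);
}

public class OptionalStep<INPUT, OUTPUT> : IPipelineStep<INPUT, OUTPUT> where INPUT : OUTPUT
{
    private readonly IPipelineStep<INPUT, OUTPUT> _step;
    private readonly Func<INPUT, bool> _choice;

    public OptionalStep(Func<INPUT, bool> choice, IPipelineStep<INPUT, OUTPUT> step)
    {
        _choice = choice;
        _step = step;
    }

    public OUTPUT Process(INPUT input)
    {
        if (_choice(input))
        {
            return _step.Process(input);
        }
        return input; // skipped: pass the input straight through
    }
}

// Hypothetical concrete steps.
public class AddTenStep : IPipelineStep<int, int>
{
    public int Process(int input) => input + 10;
}

public class NegateStep : IPipelineStep<int, int>
{
    public int Process(int input) => -input;
}

public class AddOneStep : IPipelineStep<int, int>
{
    public int Process(int input) => input + 1;
}

public class PipelineWithOptionalStep : Pipeline<int, int>
{
    public PipelineWithOptionalStep()
    {
        PipelineSteps = input => input
            .Step(new AddTenStep())
            .Step(new OptionalStep<int, int>(i => i > 15, new NegateStep()))
            .Step(new AddOneStep());
    }
}
```

The predicate sees the value arriving at the optional step, not the pipeline's original input: with these steps, an input of 3 becomes 13, skips the negation and ends up as 14, while 10 becomes 20, is negated and ends up as -19.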

And it's easy to move from this code to a step that chooses which of several steps to run. Of course, the optional step, or the steps you choose between, can be entire pipelines themselves... Other concerns, such as handling exceptions from failing steps, or logging, could potentially be wrapped up in the same style.
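As a sketch of those ideas, here's a hypothetical `ChoiceStep` that picks between two steps, and a hypothetical `FallbackOnErrorStep` that catches exceptions from its inner step. Neither is from Sitecore's code; they just follow the decorator shape of `OptionalStep`, and the two small string steps are illustrative assumptions.

```csharp
using System;

public interface IPipelineStep<INPUT, OUTPUT>
{
    OUTPUT Process(INPUT input);
}

// Hypothetical decorator: runs one of two steps depending on a predicate.
public class ChoiceStep<INPUT, OUTPUT> : IPipelineStep<INPUT, OUTPUT>
{
    private readonly Func<INPUT, bool> _choice;
    private readonly IPipelineStep<INPUT, OUTPUT> _whenTrue;
    private readonly IPipelineStep<INPUT, OUTPUT> _whenFalse;

    public ChoiceStep(Func<INPUT, bool> choice,
                      IPipelineStep<INPUT, OUTPUT> whenTrue,
                      IPipelineStep<INPUT, OUTPUT> whenFalse)
    {
        _choice = choice;
        _whenTrue = whenTrue;
        _whenFalse = whenFalse;
    }

    public OUTPUT Process(INPUT input)
    {
        return _choice(input) ? _whenTrue.Process(input) : _whenFalse.Process(input);
    }
}

// Hypothetical decorator: if the inner step throws, returns a fallback
// value computed from the input instead of letting the exception escape.
public class FallbackOnErrorStep<INPUT, OUTPUT> : IPipelineStep<INPUT, OUTPUT>
{
    private readonly IPipelineStep<INPUT, OUTPUT> _step;
    private readonly Func<INPUT, Exception, OUTPUT> _fallback;

    public FallbackOnErrorStep(IPipelineStep<INPUT, OUTPUT> step, Func<INPUT, Exception, OUTPUT> fallback)
    {
        _step = step;
        _fallback = fallback;
    }

    public OUTPUT Process(INPUT input)
    {
        try
        {
            return _step.Process(input);
        }
        catch (Exception ex)
        {
            return _fallback(input, ex);
        }
    }
}

// Hypothetical steps used to exercise the decorators.
public class ParseIntStep : IPipelineStep<string, int>
{
    public int Process(string input) => int.Parse(input);
}

public class LengthStep : IPipelineStep<string, int>
{
    public int Process(string input) => input.Length;
}
```

Because both branches of `ChoiceStep` produce `OUTPUT`, it doesn't need the `INPUT : OUTPUT` constraint that `OptionalStep` does. Catching every `Exception` is deliberately blunt here; a real version would probably catch specific types and log what it swallowed.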

Edited to add: After a conversation on Twitter I also added a gist with a simple example of how a decorator could be used to apply a particular step to every item in an input enumeration, plus one showing how a decorator could make pipeline steps raise start and end events.
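The gists themselves aren't reproduced here, but as a rough idea of the shape, a for-each decorator and an event-raising decorator might look something like this. Both classes (and `SquareStep`) are hypothetical sketches, not the code from those gists.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public interface IPipelineStep<INPUT, OUTPUT>
{
    OUTPUT Process(INPUT input);
}

// Hypothetical decorator: applies an inner step to every item in a sequence.
public class ForEachStep<INPUT, OUTPUT> : IPipelineStep<IEnumerable<INPUT>, IEnumerable<OUTPUT>>
{
    private readonly IPipelineStep<INPUT, OUTPUT> _step;

    public ForEachStep(IPipelineStep<INPUT, OUTPUT> step)
    {
        _step = step;
    }

    // ToList() runs the inner step eagerly, once per item, when this step executes.
    public IEnumerable<OUTPUT> Process(IEnumerable<INPUT> input)
    {
        return input.Select(item => _step.Process(item)).ToList();
    }
}

// Hypothetical decorator: raises events before and after the inner step runs.
public class EventRaisingStep<INPUT, OUTPUT> : IPipelineStep<INPUT, OUTPUT>
{
    private readonly IPipelineStep<INPUT, OUTPUT> _step;

    public event Action<INPUT> Started;
    public event Action<OUTPUT> Finished;

    public EventRaisingStep(IPipelineStep<INPUT, OUTPUT> step)
    {
        _step = step;
    }

    public OUTPUT Process(INPUT input)
    {
        Started?.Invoke(input);
        var output = _step.Process(input);
        Finished?.Invoke(output);
        return output;
    }
}

// Hypothetical step used to exercise the decorators.
public class SquareStep : IPipelineStep<int, int>
{
    public int Process(int input) => input * input;
}
```

Because `ForEachStep` is itself an `IPipelineStep`, it can be dropped into a `Step()` chain, or wrapped in an `EventRaisingStep`, like any other step.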

And in the meantime I'm even more interested to see the detail of how Sitecore's code has approached this once it gets released, so I can see how much further they've managed to take it...
